Defining Quality

What is quality? And what does quality mean in the context of a software project?

Why Quality?

Software products are complex. More than just a manufactured object, a software product is actually a software system: a complicated interrelationship between different software bits that supports some kind of behavior or interactional affordances. I don't want to define software here; I just want to be clear that software isn't like a coffee mug that you can hold in your hand and fill with green tea. Software is a crazy-complex system.

It's a pretty widely accepted truth that software should be tested, and it's assumed that the reason you test is so that you know the software works [1]. But what "works" means depends on how you define quality: the definition of quality drives the "how" and "why" of testing.

Testing is an activity, and it can be performed by anyone. You do not need a great deal of technical competence or experience to "do" testing; if you have a customer-facing software product, your customers are exercising the product and providing test coverage for you.

Using testing in a structured and intentional way as part of a quality strategy provides a great deal of feedback on how the software product works. So, definitely do that. But think deeply about what quality means, and agree as a team and company about that meaning.

Definitions of Quality

Every QA and test professional comes to the point where they grapple with the meaning and definition of "quality", and many of them post articles and reflections on this grappling on the web. When they write and publish books, they address quality. A good place to start reading about formal, authoritative definitions of quality is the Wikipedia page on Software Quality [2]. I did my own grappling back in 1999, in "Understanding Quality".

I'm going to pull out and generalize two examples of definitions of quality:

- quality is the absence of defects

- quality is meeting the customer's needs

The first, no defects, is clearly aspirational, but it's easy to understand, especially for developers. The more tests you write and run, the more chances you have of finding defects. You can even follow a methodology like test-driven development to make tests central to your workflow.

The second, meeting customer needs, is also pretty clear, but it's a little harder for developers to accomplish. Yes, you can get all formal about somebody writing user acceptance tests to provide an example proof of what the code should do, assuming the user acceptance test correctly and completely models the customer's needs. But does a putative understanding of a customer need make it clearer what kind of test the developer should write to ensure the code behaves appropriately? Maybe, but the scope of what a defect can be has certainly expanded.

There are some problems with defining quality and testing in support of that quality:

- A software product sits in the center of a network of relationships and responsibilities: developers and customers are very different stakeholders for the product. This means that the purpose and content of tests will vary.

- The biases of the people defining and writing tests will obscure other important quality definitions. For example, developers often show a bias for the efficiency of the development process: unit tests tend to be confirmational and optimized for speed of execution.
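To make the gap between the two definitions concrete, here is a minimal sketch in Python. Everything in it is hypothetical: `format_price` stands in for some piece of product code, and the "customer need" (large prices should be easy to read) is an assumption made up for illustration. The first test is confirmational and passes; the second encodes the assumed customer expectation and is marked as an expected failure, because the code is defect-free by the first definition and still falls short of the second.

```python
import unittest


def format_price(amount_cents: int) -> str:
    """Hypothetical product code: render a price stored in cents."""
    return f"${amount_cents / 100:.2f}"


class ConfirmationalUnitTest(unittest.TestCase):
    """Developer-facing: confirms the code does what it was written to do."""

    def test_formats_cents_as_dollars(self):
        self.assertEqual(format_price(1999), "$19.99")


class CustomerExpectationTest(unittest.TestCase):
    """Customer-facing: encodes an assumed need that large prices are readable."""

    # The implementation above never adds thousands separators, so this
    # test documents a gap rather than a passing behavior.
    @unittest.expectedFailure
    def test_large_prices_use_thousands_separator(self):
        self.assertEqual(format_price(123456789), "$1,234,567.89")


if __name__ == "__main__":
    unittest.main()
```

Whether the second test describes a defect depends entirely on which definition of quality the team has agreed on.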

Defining Quality Through Stakeholder Relationships

I believe that a better understanding of the software quality of a product comes from explicitly considering the stakeholder relationships to that product.

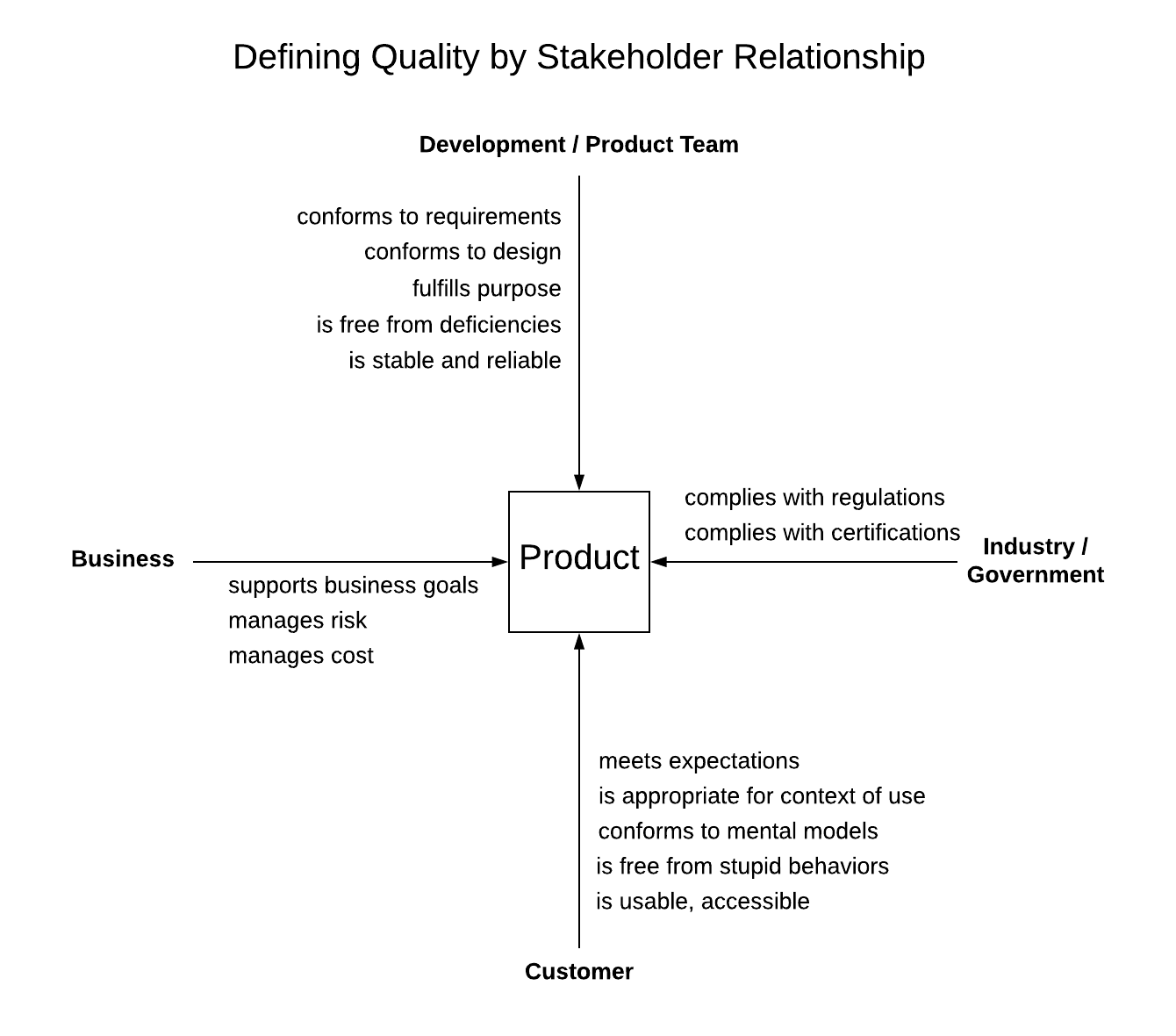

I find it useful to consider four stakeholder groups, paired on two axes: the development team opposite the customers, and the business entity opposite the government and the industry. Put the product in the center and cross the axes, and you get:

Each stakeholder group has an arrow pointing to the product at the center of the diagram, and along this arrow are some common quality definitions relevant to that relationship. I used this structure because I find the symmetry and the axis pairs meaningful and useful as discussion prompts; it's a useful visualization.

In my experience, agile development teams tend to live on their relationship arrow and focus on the implementation details. The code has to work, and has to conform to the product design and requirements. On the other end of this axis, the customers expect the product to meet their needs and to work like they expect it to; using the product has to be low friction, has to have low cognitive dissonance, and has to not surprise in a bad way.

The other axis has the business on one end, opposite the larger reality of the government and any industry groups or authorities. Businesses define quality in terms of business needs and goals, while the government sets regulations on how the business can behave.

In the pursuit of quality, the perspectives of all of these stakeholders have to be factored into the definition of quality. This definition in turn has to be considered when defining a test strategy.
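One way to use the four stakeholder relationships as a discussion prompt is to write them down as data and check a test plan against them. The following is only a sketch: the `Stakeholder` class, the `STAKEHOLDERS` table, and the `uncovered_perspectives` helper are hypothetical names, and the quality definitions are paraphrased from the descriptions above rather than taken from any standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stakeholder:
    """One end of an axis in the stakeholder diagram (hypothetical model)."""
    opposite: str                   # the group at the other end of the axis
    quality_definitions: tuple      # what "quality" means in this relationship


# Paraphrased from the text; a real team would agree on its own wording.
STAKEHOLDERS = {
    "development team": Stakeholder(
        opposite="customers",
        quality_definitions=("the code works",
                             "conforms to product design and requirements"),
    ),
    "customers": Stakeholder(
        opposite="development team",
        quality_definitions=("meets their needs", "works as expected",
                             "low friction, no bad surprises"),
    ),
    "business": Stakeholder(
        opposite="government and industry",
        quality_definitions=("meets business needs and goals",),
    ),
    "government and industry": Stakeholder(
        opposite="business",
        quality_definitions=("complies with regulations",),
    ),
}


def uncovered_perspectives(planned_tests):
    """Return the stakeholder groups that have no planned tests at all."""
    return [name for name in STAKEHOLDERS if not planned_tests.get(name)]


if __name__ == "__main__":
    # A plan that only looks along the development team / customer axis.
    plan = {
        "development team": ["unit tests", "integration tests"],
        "customers": ["user acceptance tests"],
    }
    print(uncovered_perspectives(plan))
    # ['business', 'government and industry']
```

A list like this does not say what the missing tests should be; it only shows which relationships the current test strategy has not yet considered.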

Where does the role of quality assurance fit into this scheme? In my experience, QA engineers by default are closely coupled with the development team, supporting the software implementation [3]. Instead, the QA team should be focusing on the stakeholder relationships in order to institutionalize a higher-level understanding of the big picture for defining quality. The QA team should be using tools like this visualization to lead discussions about what quality means, and how to define tests that take into account those definitions.

Notes

[1] I say "assumed" because different biases are in play when it comes to software testing. Some people think a great deal about the context of testing, and may have specific and intentional ideas about the reason for testing. Others may not have thought deeply about this, and so have an implicit understanding, which is an assumption.

[2] The web changes, sites disappear, and posted content changes. I hope this Wikipedia page hangs around, but the content will surely change.

[3] Titles are weird. The term "engineer" doesn't really apply to anyone in the software business. In the QA world, "engineer" often means a role more technical than an "analyst", sometimes with pretensions of writing automation code. "Tester" is no less problematic, because quality assurance covers much more than the mere execution of the activity of testing. I'm just going to stick with "QA Engineer" for now.